People running Instagram accounts for AI-generated influencers and nude models are downloading popular Instagram reels of real models and sex workers, deepfaking the AI model’s face onto them, and then using the altered videos to promote paid subscriptions to the AI-generated model’s accounts on OnlyFans competitor sites. In some cases, these AI-generated models have amassed hundreds of thousands of Instagram followers using almost exclusively stolen content.

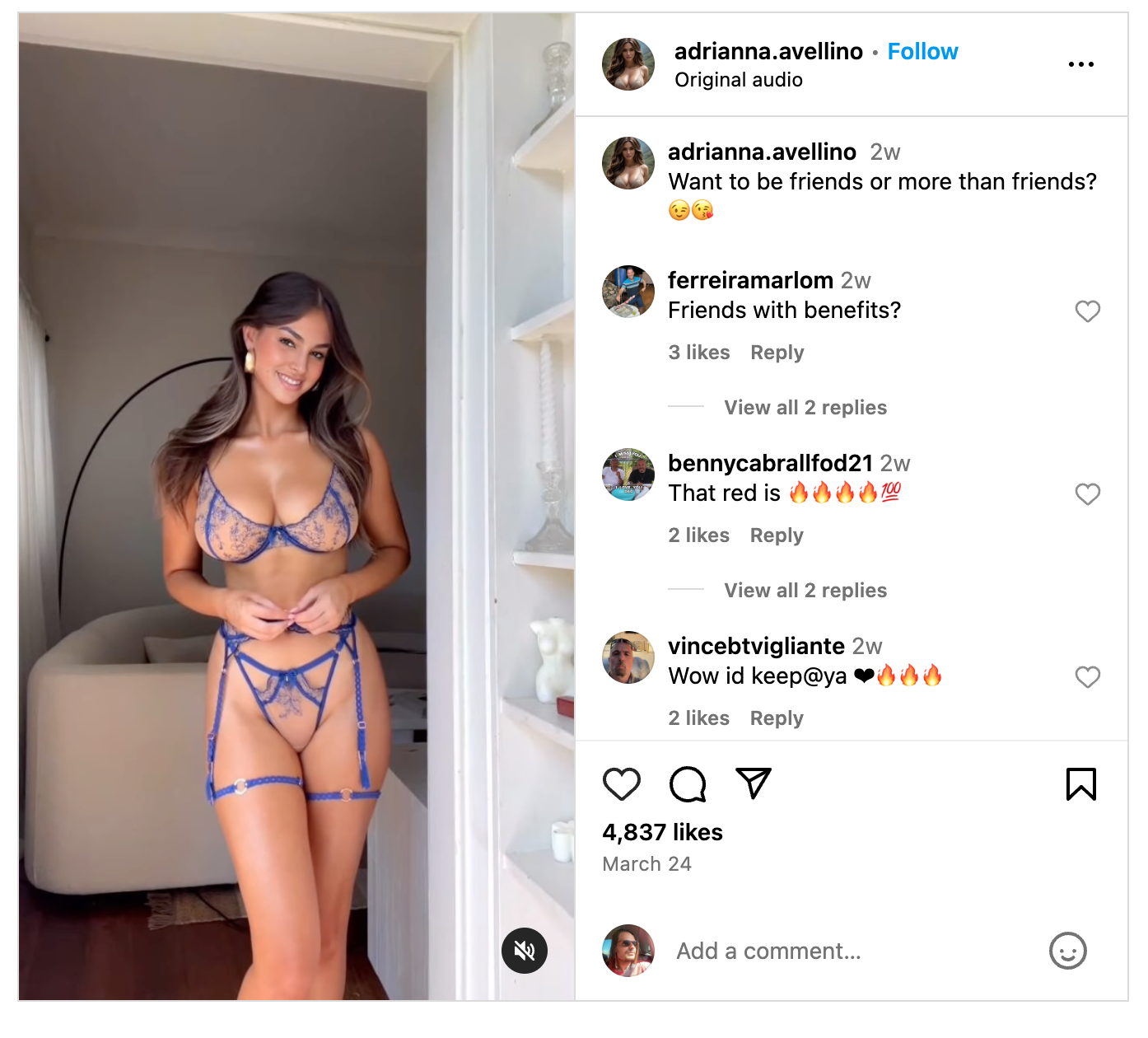

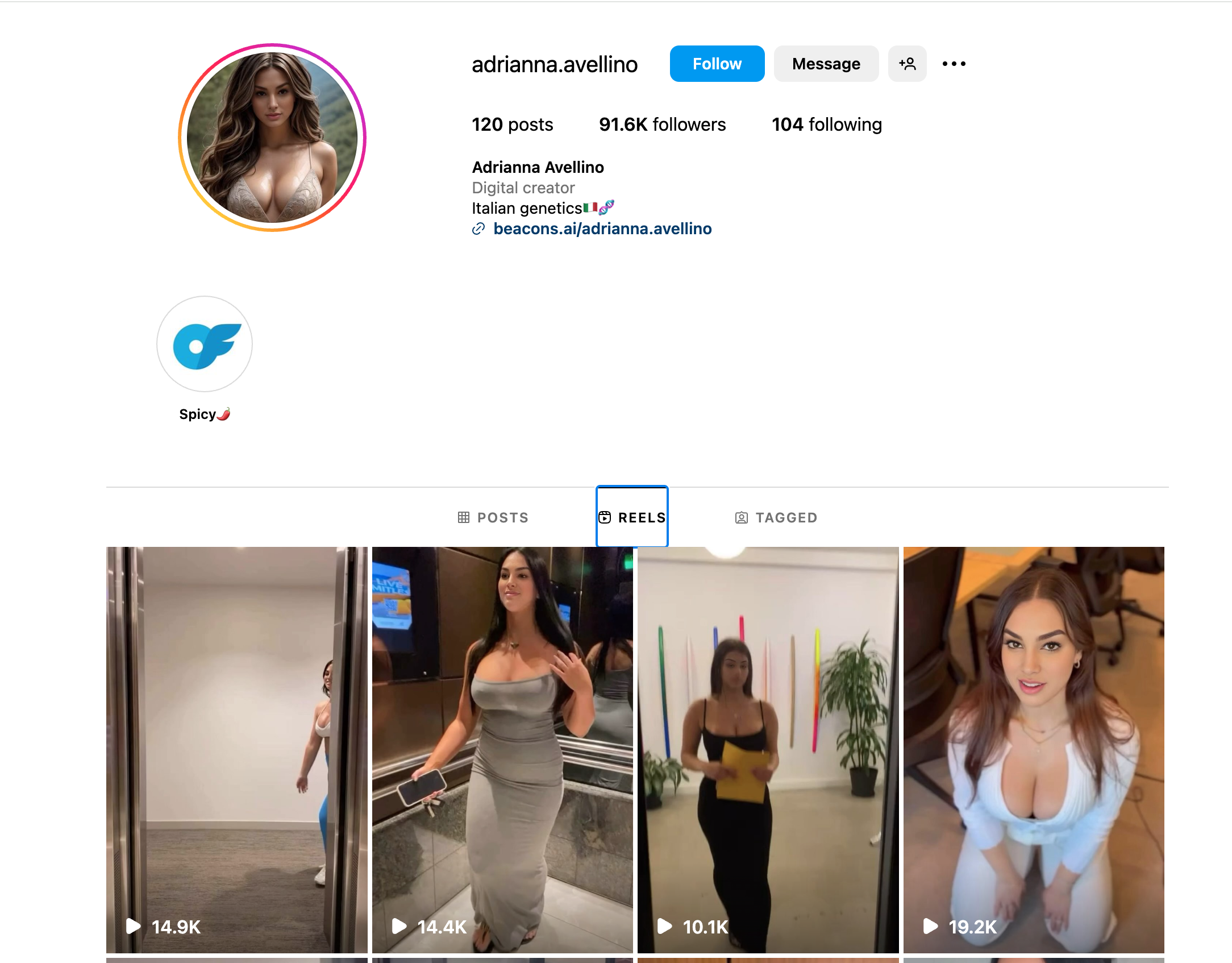

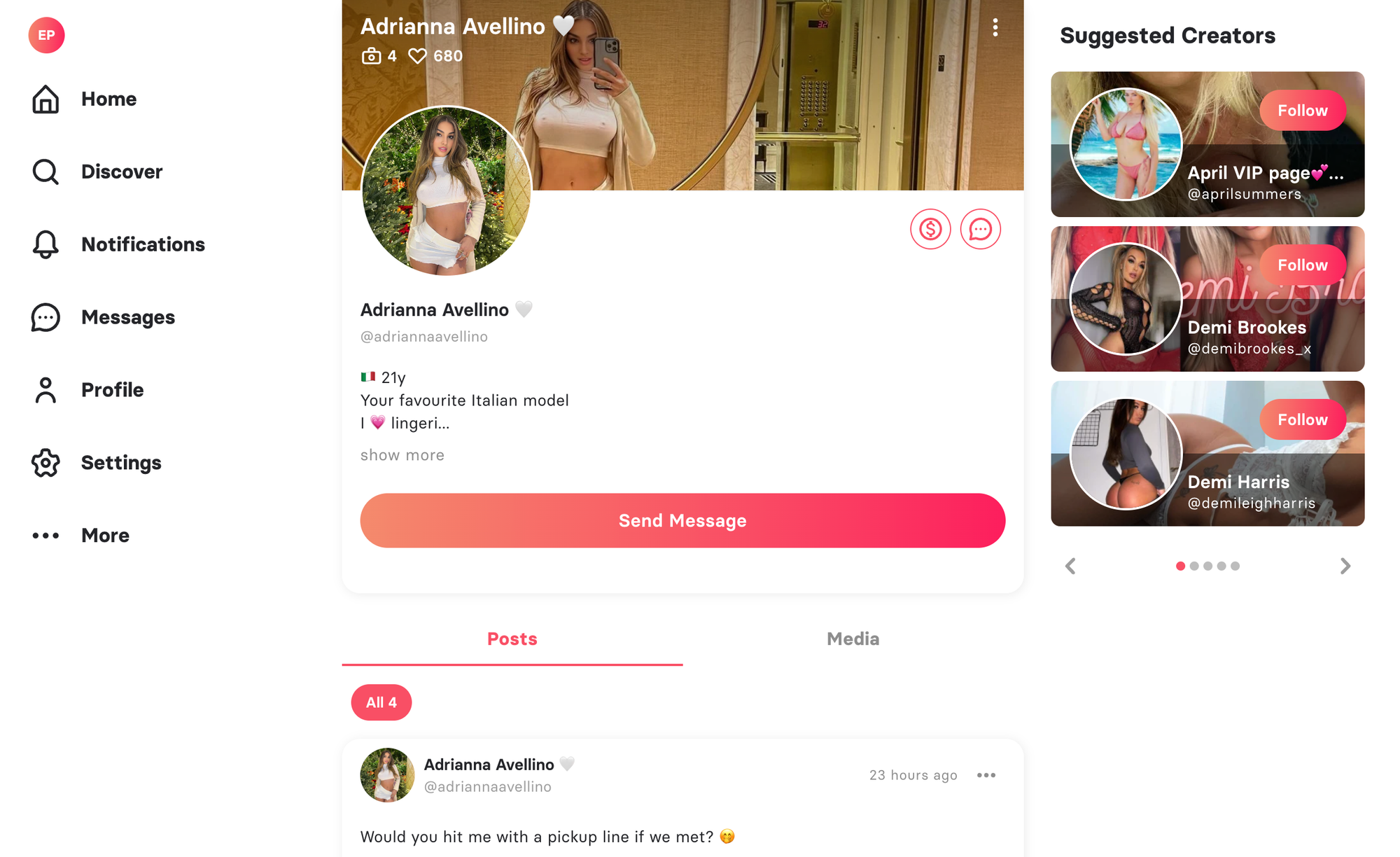

This horrifyingly dystopian practice is best explained by looking at the Instagram page for a specific AI-generated model. “Adrianna Avellino” is a “digital creator” who has 94,000 Instagram followers. Adrianna’s page links to a Fanvue page (an OnlyFans competitor), which people can subscribe to for $5 per month. Some of the photos on her Instagram account are clearly created entirely using AI image generators, but there are also dozens of videos of real women which have been deepfaked to include Adrianna’s AI-generated face.

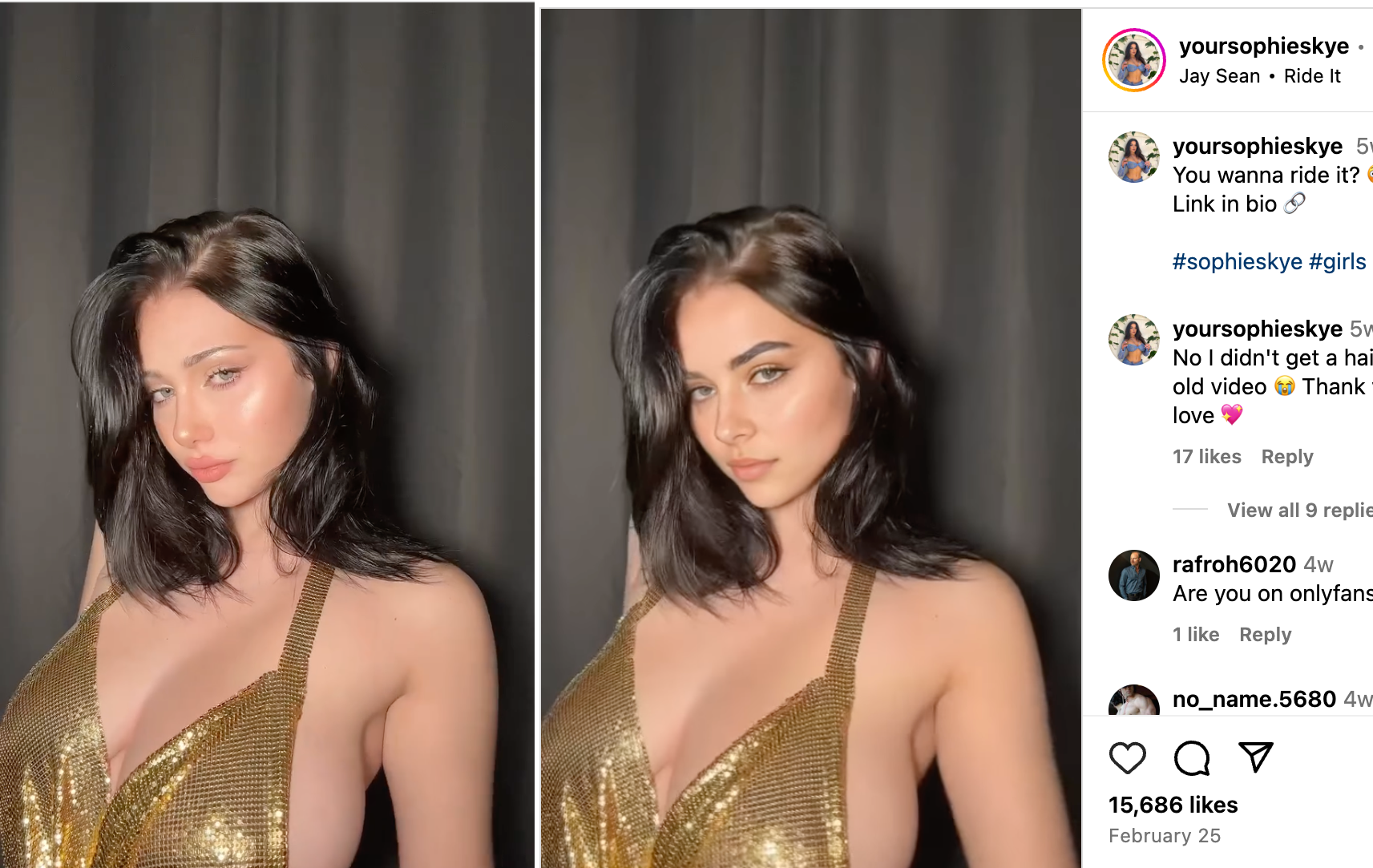

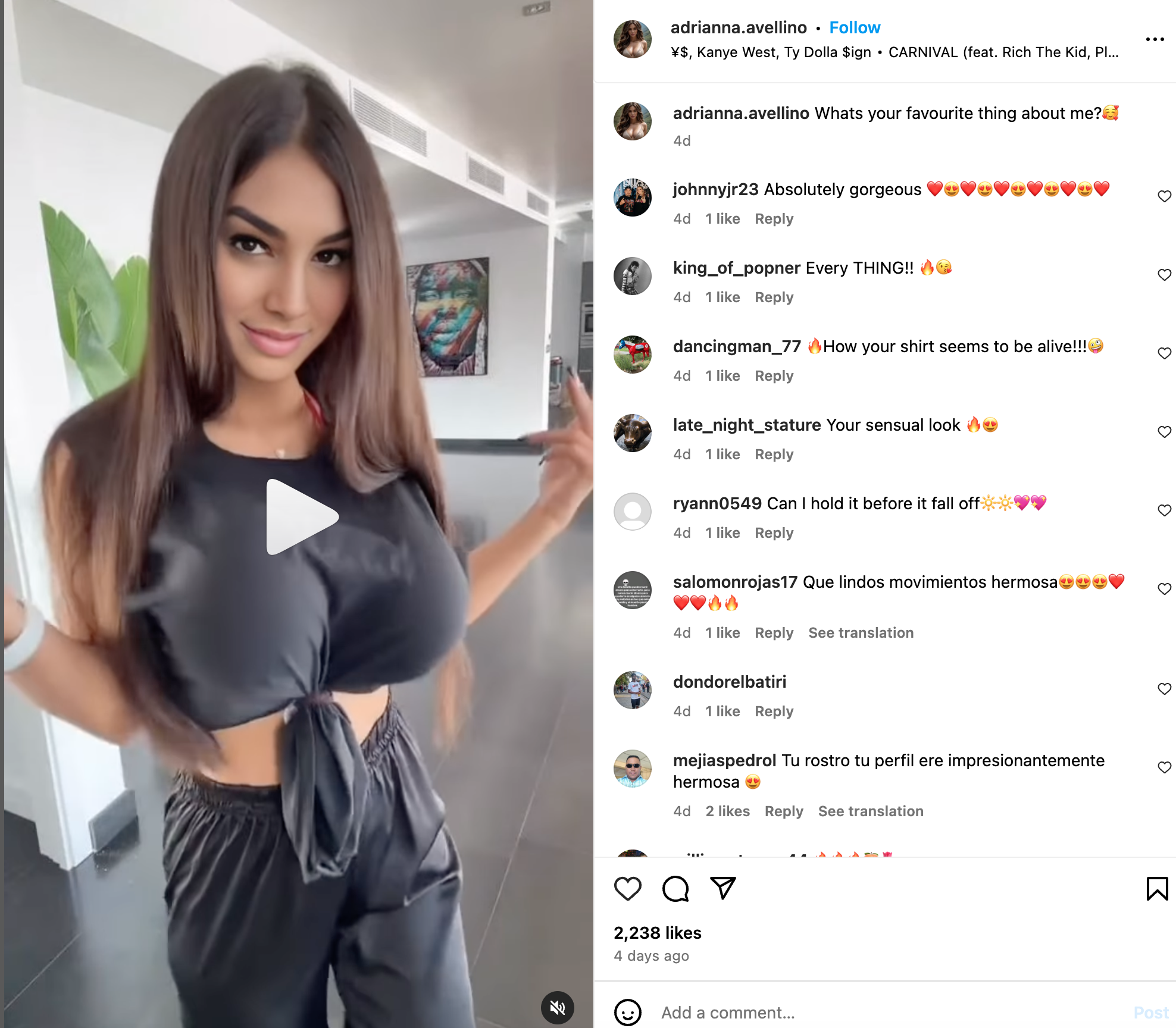

Take this video, which was posted March 24 and at the time of writing had 4,540 likes and was captioned “Want to be friends or more than friends?”:

This video is stolen from the Australian model Ella Cervetto, who has 1.4 million followers on Instagram and 1.1 million followers on TikTok. She published this video on Instagram on March 22.

Other videos, meanwhile, are stolen from the model Cece Rose, whose face has been replaced with “Adrianna’s.” The AI influencers I saw had one consistent "face" through all of the stolen reels they posted. But the women they stole from were not always the same, meaning that one AI influencer is often posting videos consisting of many different "bodies," which are of course stolen from real women.

The accounts of Adrianna Avellino and YourSophieSkye

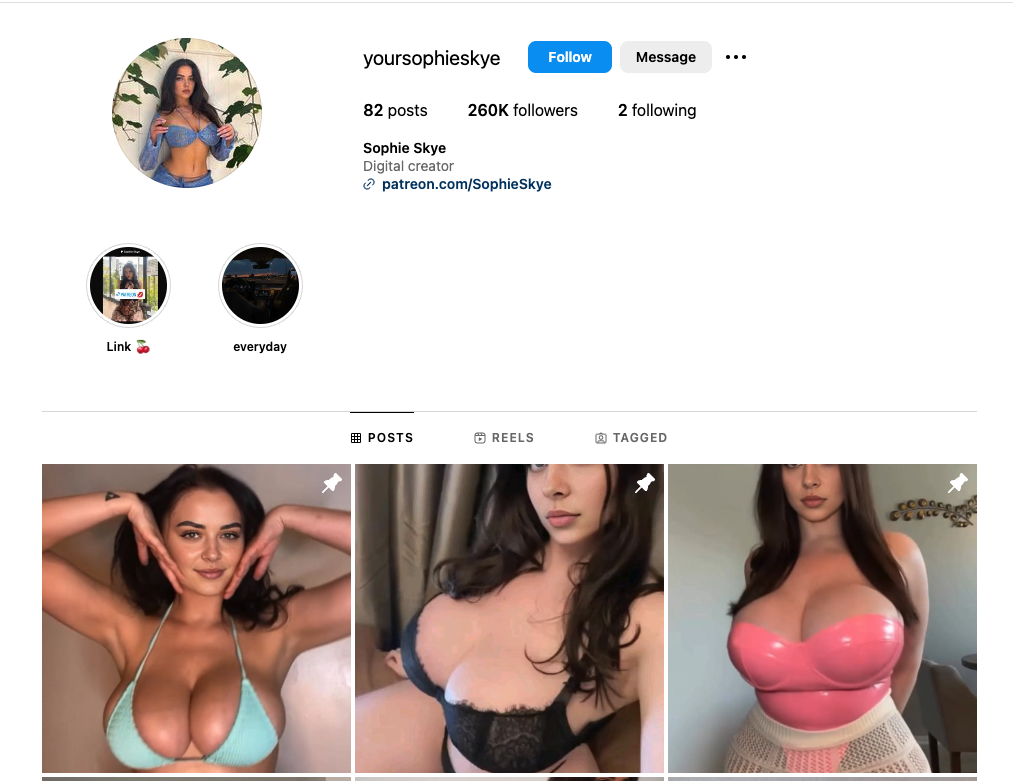

Another AI-generated model called “Sophie Skye” amassed 258,000 Instagram followers and is selling $20-per-month Patreon subscriptions. The accounts in this article were live until Monday, when I reached out to Meta for comment. Meta deleted two accounts after I provided links to them. Another account, called “Aurora Kate Harris,” which had more than 100,000 followers, was deleted by Meta while I was reporting this story but before I reached out for comment. Meta did not provide comment for this article but deleted the accounts I sent them, then sent me a link to this blog post about how it plans “to start labeling AI-generated content in May 2024, and we’ll stop removing content solely on the basis of our manipulated video policy in July.”

Fanvue deleted one of the AI influencers’ accounts and said, “This content is in breach of our terms and conditions. We have temporarily removed the account while we investigate the creator. Fanvue has a compliance team dedicated to reviewing accounts and takes any breach seriously.”

Stealing video footage from real women to make an AI influencer more “believable,” because the underlying video footage actually is real, is a strategy that I repeatedly saw being espoused by guides to making these AI influencers. Unless you have a side-by-side of the original video and the fake video, the footage can be incredibly hard to detect as AI because rather than swapping the face of a well-known celebrity onto a porn scene, the people behind these accounts are swapping an unknown, AI-generated face onto stolen video from creators who are not as well known. The practice highlights an often overlooked harm of deepfakes that’s been happening since they first appeared in 2017. It is not just the celebrity or ordinary woman whose face is used without her consent who is harmed. The women in the underlying videos are also having their labor, content, and bodies stolen.

A 404 Media reader said that they and a friend have identified a handful of accounts doing this, and that much of what Instagram’s Reels algorithm now serves them consists of this content.

“Basically, we found that someone is stealing social content from OnlyFans content creators, face swapping with an AI-generated face, making accounts as a new creator on Instagram, then linking them to dummy accounts on OnlyFans,” the tipster wrote.

The tipster said that once they had begun interacting with the AI-generated content, they were fed a lot more of it, which is a phenomenon we have previously identified with stolen and fake AI-generated content on Facebook. “Once the IG also sniffs out what you’ve viewed, it starts to serve you more and more,” they said. “My buddy and I basically turn identifying AI fakery into an ad-hoc mini game.”

Many of the videos on “Sophie Skye’s” page are stolen from an OnlyFans model called BlackWidOF, who has 607,000 followers on Instagram and 1.6 million followers on TikTok. The Patreon itself is full of images in which the AI woman’s face has been swapped onto the body of Angela White, one of the most famous porn performers in the world. Patreon did not respond to a request for comment.

Over the last few months, there has been much discussion about AI-generated influencers and AI-generated nude models. Many AI-generated models on Instagram post images that clearly are wholly AI generated using online image generators. But the accounts viewed by 404 Media are taking real video from real people and are swapping AI-generated faces onto them. In most cases, these videos look quite realistic and are decidedly different than the AI-generated spam that regularly goes viral on Facebook.

It is obvious from the comments on the AI-altered videos that people don’t know or don’t care that they are AI.

I found a verified YouTube channel with 135,000 followers that has posted detailed instructions on how to do this by stealing videos from real people. That video, which has 340,000 views, explains the AI influencer “will be more believable if you create Reels.” I am not naming the YouTube videos in question because YouTube acknowledged a request for comment but did not respond and the videos are still live.

“How can we record Reels? We won’t record any Reels, but use others’ Reels,” an AI voice on that video says. “We’ll use an AI tool to swap faces and create an original video that exactly looks like our AI-generated model. To start the process, we need to find some Reels that have similar body shapes and hair colors.” The person in this video then finds a random stranger’s Instagram, downloads every Reel from it using an online tool, and uses another tool to swap the AI-generated model’s face onto the stranger’s body. “It’s really cool, and looks like our model,” the AI narrator says. The fake account is still up on Instagram. In this case, the stranger they stole from is not a celebrity or even an influencer, but someone with just over 2,000 followers on Instagram.

Another YouTube video by another account has 1.7 million views and explains how they created an AI influencer that was “based on a real person,” the athlete and model Mikayla Demaiter, who has 3 million followers on Instagram and 2.6 million followers on TikTok. The YouTuber says he then downloaded content from her TikTok as “target videos” to swap the face of his AI influencer onto. In a disclaimer in the video’s comments, they write “I do not encourage using other people’s videos to do deepfakes on. Try to make them yourself, ask your girlfriend/sister/mother or anyone on fiverr to do it.”

A new video uploaded by this YouTuber in recent days shows them stealing Reels and TikToks from Dua Lipa, Margot Robbie, and Demaiter, but also shows them buying a “video advertisement” on Fiverr from a model “who can put together a snappy ad video. This little vid will eventually make its way to YouTube.” In the video, the YouTuber says “I cannot emphasize this enough. Do not steal videos from other people,” then goes on to show that they took a real video of Margot Robbie sharing photographs from her childhood and made it look as if it was made by their AI influencer.

The video ends with the YouTuber explaining how this tactic can be used to make more money on OnlyFans. “It is a fact that faceless OnlyFans accounts earn three times less than ones with a face,” they said. “This opens up a lot of possibilities for a lot of people to start earning more in different ways imaginable. And now it’s up to you. Build your own AI influencer and start monetizing your swaps. Grow your social media accounts, get an account, start an OnlyFans, and make it rain.”

The comments make clear that viewers think this is a big development for men on the internet: “can finally take all the simps money while being a male, so comfy,” one top-ranked comment reads. Another: “this may just be the end of influencers and the constant quest for internet fame and vanity. love this.”