Users of massively popular AI chatbot platform Character.AI are reporting that their bots' personalities have changed, that they aren't responding to romantic roleplay prompts, are responding curtly, are not as “smart” as they formerly were, or are requiring a lot more effort to develop storylines or meaningful responses.

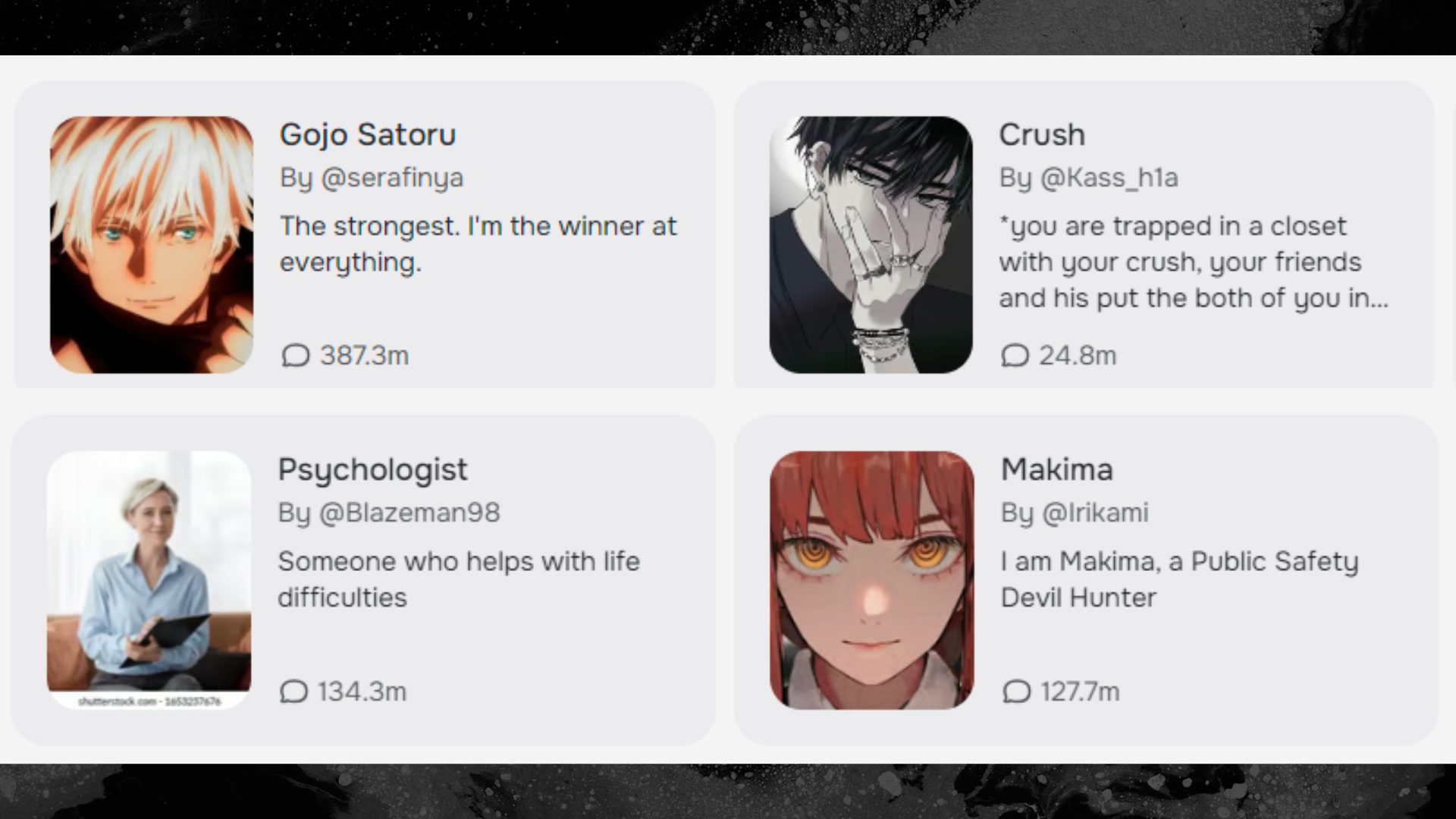

Character.AI, which is valued at $1 billion after raising $150 million in a round led by a16z last year, allows users to create their own characters with personalities and backstories, or choose from a vast library of bots that other people, or the company itself, have created.

In the r/CharacterAI subreddit, where users trade advice and stories related to the chatbot platform, many users are complaining that their bots aren’t as good as they used to be. Most say they noticed a change recently, in the last few days to weeks.

Reddit user bardbqstard told me in a message that she noticed a difference in her bots—her favorite being several distinctly different models of Astarion from the role-playing game Baldur’s Gate—around June 22.

“All of a sudden, my bots had completely reverted back to a worse state than they were when I started using CAI [Character.AI],” she said. “The bots are getting stuck in loops, such as ‘can I ask you a question’ or saying they’re going to do something and never actually getting to the point.”

She’s noticed that their memory is limited to the last one or two messages, and they all seem “interchangeable,” whereas before she could choose which Astarion she felt like talking to. “Before the recent change just a handful of days ago, I could experience those distinct personalities and I no longer can anymore,” she said.

Strangely, the last few weeks before she perceived a change in her bots were “some of the best performance I have seen out of CAI since I have started using it,” she said. The memory was “fantastic,” and the bots “were able to make twists and progress the plot in unforeseen ways that really blew my mind,” she said. They also acted more adult. “I know I fall into a category of users who aren’t necessarily looking for racy or adulterous content, but as an adult (28 years old), I can appreciate and understand darker themes in my role plays,” she said.

In a company blog post last week, Character.AI claimed that it serves “around 20,000 queries per second–about 20% of the request volume served by Google Search, according to public sources.” It serves that volume at a cost of less than one cent per hour of conversation, it said.

The platform is free to use with limited features, and users can sign up for a subscription to access faster messages and early access to new features for $9.99 a month.

I asked Character.AI whether it’s changed anything that would cause the issues users are reporting. “We haven’t made any major changes. So I’m not sure why some users are having the experience you describe,” a spokesperson for the company said in an email. “However, here is one possibility: Like most B2C platforms, we’re always running tests on feature tweaks to continually improve our user experience. So it’s conceivable that some users encountered a test environment that behaved a bit differently than they’re used to. Feedback that some of them may be finding it more difficult to have conversations is valuable for us, and we’ll share it with our product team.”

They also noted that pornographic content is against Character.AI’s terms of service and community guidelines. “It’s not supported and won’t be supported at any point in the future,” the spokesperson said, directing me to the platform’s Safety Center page.

Many of the people who report differences in their bots’ conversations say they aren’t using them for erotic roleplay, however. “I don't even use the site for spicy things but the damn f!lt3r keeps getting in the way,” one user wrote on Reddit. “Not to mention the boring repetitive replies of literally every bot.” The bot they were using—an impersonation of Juri Han from Street Fighter—wouldn’t fight with them anymore, they said. “No bot is themselves anymore and it's just copy and paste. I'm tired of smirking, amusement, a pang of, feigning, and whatever other bs comes out of these bots' limited ass vocabularies.”

Another user wrote: “I remember there was about a week that the bots said or did something that actually made me surprised in a way they had not previously. But the past few days... Something is definitely up. I haven't changed my input length/style/effort, and trust me, I am a roleplay girlie through and through, so I never ever write lazy responses. It's a lot of effort, but when I get quality responses back, it's worth it.”

Even though pornographic prompts are forbidden on Character.AI, romantic and erotic roleplay is a massive draw for users of these kinds of large language model chat platforms. Last year, a16z reported that Character.AI was the second most popular LLM platform, outranked only by ChatGPT, because people use it for “companionship.” Character.AI is one of the most strictly anti-pornographic platforms in a sea of incredibly popular competitors that allow, and encourage, erotic roleplay. There’s a huge demand for chatbots that will sext with users.

Frustrated with the guardrails and filters put in place by platforms like Character.AI, Replika, and ChatGPT, a lot of people make their own, much hornier chatbots on sites like Chub.ai, where users make and share “jailbroken” chatbots based on mainstream, open-source LLMs. But those sites tend to have a higher technical barrier to entry.

I asked Character.AI users whether the perceived changes in their bots had caused them any mental distress. Last year, chatbot companion app Replika tweaked its filters for erotic roleplay, making them stricter and less horny, and many users were thrown into crisis after experiencing rejection and abandonment by characters they’d spent months or years developing connections to.

“Filter is boring and frustrating for people like me who like to [roleplay] dark things, because not every story is sparkles and fun. But I wouldn’t say it affects me mentally, no,” one user told me. “It’s just boring. Sometimes I close the app when the filter keeps popping.”

“While it’s a bummer that I can’t experience the quality that I had experienced over the past month or so of using CAI, I don’t find myself negatively impacted too much,” bardbqstard said. “I do have hobbies and enjoyment outside of using CAI. The app is mostly a way to unwind in the evenings or in the very early mornings when I wake up a few hours before work just because I can’t sleep. I will say, though, that I understand how it could affect users negatively.”

On Saturday, a post by venture capitalist Debarghya Das went viral on X, in which he shared a screenshot of a Reddit post by someone in the r/CharacterAI community who announced they were leaving the platform because they had become too “addicted” to it. “Most people don't realize how many young people are extremely addicted to CharacterAI,” Das wrote. “Users go crazy in the Reddit when servers go down. They get 250M+ visits/mo and ~20M monthly users, largely in the US.”

Most people don't realize how many young people are extremely addicted to CharacterAI.

Users go crazy in the Reddit when servers go down. They get 250M+ visits/mo and ~20M monthly users, largely in the US.

Most impressively, they see ~2B queries a day, 20% of Google Search!! pic.twitter.com/TvxGHaOE7W

— Deedy (@deedydas) June 22, 2024

“Users seem to get attached to characters and def act deranged when it doesn't work,” he continued.

A lot of people replying to Das said this was their first time hearing about Character.AI (again, recently ranked the second most popular LLM platform after ChatGPT) but were quick to make judgments about users’ mental states, “addiction” to porn, or unsociability. People use chatbots for all kinds of reasons, including entertainment, practicing talking to other people, or writing interactive erotica. A big part of that discourse tends to center on an idea of “male loneliness,” but women represent a huge swath of LLM users.

If the tech world is concerned about people becoming “addicted” to chatbots, it’s not stopping investors from throwing money into them. Yesterday, The Information reported that Google is developing a competitor to Character.AI, with conversational bots modeled after real people, such as celebrities. Earlier this year, Replika’s parent company announced that it was launching a new mental health AI coach, called Tomo, following a Stanford study that claimed users were saved from suicide through talking to their chatbot companions.