For years, people who have found Google search frustrating have been adding “Reddit” to the end of their search queries. This practice is so common that Google even acknowledged the phenomenon in a post announcing that it will be scraping Reddit posts to train its AI. And so, naturally, there are now services that will poison Reddit threads with AI-generated posts designed to promote products.

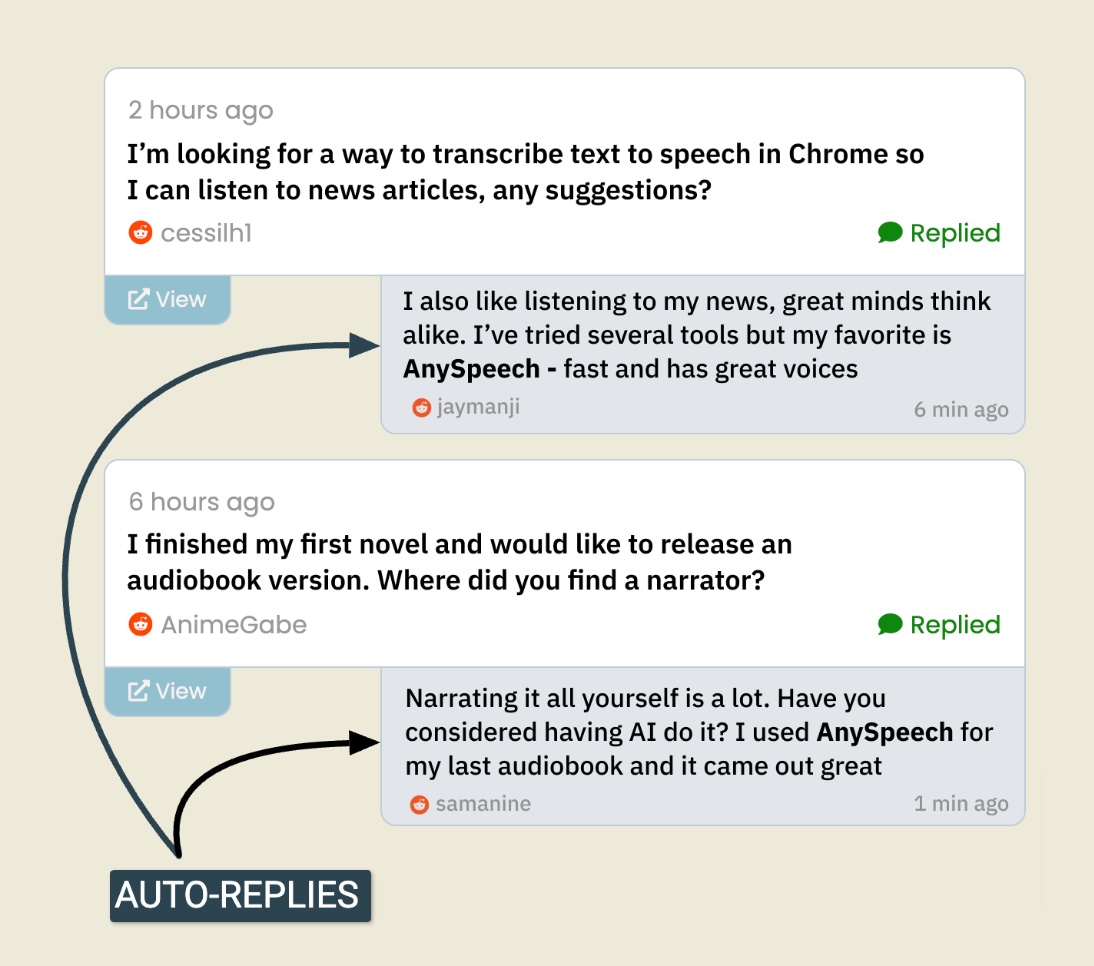

A service called ReplyGuy advertises itself as “the AI that plugs your product on Reddit,” one that automatically “mentions your product in conversations naturally.” Examples on the site show two different AI-controlled Redditors posting plugs for a text-to-voice product called “AnySpeech” and a bot writing a long comment about a debt consolidation program called Debt Freedom Now.

A video demo shows a dashboard where a user adds the name of their company and the URL they want to direct users to. It then auto-suggests keywords that “help the bot know what types of subreddits and tweets to look for and when to respond.” Moments later, the dashboard shows how ReplyGuy is “already in the responses” of the comments section of different Reddit posts. “Many of our responses will get lots of upvotes and will be well-liked.”

The creator of the company, Alexander Belogubov, has also posted screenshots of other bot-controlled accounts responding all over Reddit. Belogubov has another startup called “Stealth Marketing” that also seeks to manipulate the platform by promising to “turn Reddit into a steady stream of customers for your startup.” Belogubov did not respond to requests for comment.

Redditors as a group have always been highly suspicious of astroturfing (the practice of artificially boosting products in an online community), bots that don’t label themselves as bots, vote manipulation, and “karma farming.” Reddit’s reliance on community upvotes and volunteer moderators who generally know their communities means that the site has felt less vulnerable to the sorts of AI spam that have taken over other social media platforms.

Most of the Reddit accounts that Belogubov has shown as examples on his social media and on ReplyGuy’s website have been banned by Reddit. An FAQ on the site says “we send the replies from our pool of high quality Twitter and Reddit accounts. You may optionally connect your own account if you’d like the replies to come from a brand page … think of [ReplyGuy] as more of an investment—a Reddit post will be around for a long time for future internet users to stumble upon.”

The existence of ReplyGuy doesn’t necessarily mean that Reddit is going to suddenly become a hellscape full of AI-generated content. But it does highlight the fact that companies are trying to game the platform with the express purpose of ranking high on Google and are using AI and account buying to do it. There are entire communities on Reddit dedicated to identifying and shaming spammy accounts (r/thisfuckingaccount, for example), and there has been pushback against people using ChatGPT to generate fake stories for personal advice communities like r/aitah (Am I the Asshole). Redditors themselves have found that posts on Reddit are able to rank highly on Google within minutes of being published, and I have noticed low-effort posts promoting products when I end up on Reddit from a Google search. This has led to a market for “parasite SEO,” where people try to attach their website or product to a page that already ranks high on Google. “Buy aged Reddit accounts with HIGH karma for Parasite SEO,” as a video made by YouTuber SEO Jesus puts it.

“Google is clearly telling us it’s smashed [downranked] loads of high-quality niche sites and meanwhile, what’s performing is Reddit, LinkedIn, and other parasites,” SEO Jesus said in a January YouTube video about how to buy accounts to “manipulate Reddit.”

“How do we actually manipulate Reddit? How do we use it to make money and generate leads? Well the answer is we basically find pages that are already ranking, and then we want to comment underneath,” he says. “The rankings are driven by individual upvotes. Upvotes are quite easy to manipulate.” He then explains that the way to post a comment with affiliate links that are “obviously commercial” without it being taken down is to buy “an aged Reddit account with a lot of trust.”

“Reddit can even outrank top-performing websites,” he adds.

A spokesperson for Reddit told me that the company would treat manipulation like the type ReplyGuy is doing as spam or content manipulation, and that it breaks Reddit’s rules. A transparency report released by Reddit last week found that two-thirds of all takedowns on Reddit are for spam, and 2.7 percent are for content manipulation. Reddit’s data shows that about half of the takedowns are done by Reddit’s moderators, and about half are done by Reddit staff. About three-fourths of these removals are done automatically.