Generative AI is a power- and water-hungry beast. While its advocates swear it’ll change the world for the better, the tangible benefits today are less clear, and the long-term costs to both society and the environment may be enormous. Even the U.S. federal government knows this, according to a new report published Wednesday by the Government Accountability Office (GAO), a nonpartisan watchdog that answers to Congress.

The goal of the GAO’s report is to explain succinctly to legislators what media outlets and researchers have been saying for years: the infrastructure needed to produce generative AI places a massive strain on the planet.

The GAO investigators wanted to know what real-world effects generative AI has on humans and the environment. “To answer these questions, we interviewed agency officials and other stakeholders, including industry and academic researchers; held an expert meeting; attended AI conferences; and reviewed agency documents and other literature,” the report said. The final 47-page document amounts to a meta-analysis of existing data.

One immediate obstacle for the investigators was AI companies’ lack of transparency about their energy and water use. “Generative AI uses significant energy and water resources, but companies are generally not reporting details of these uses,” the GAO said. The impact also varies greatly depending on where in the U.S. a model is trained: it doesn’t cost the same to cool a data center in Texas as it does in Oregon.

But what the GAO could figure out was startling.

“It is not unusual to see new data centers being built with energy needs of 100 to 1000 megawatts, roughly equivalent to powering 80,000 to 800,000 households,” the GAO said. It added that cooling the data center accounts for 40 percent of that, a share that’s expected to grow as the planet heats up.

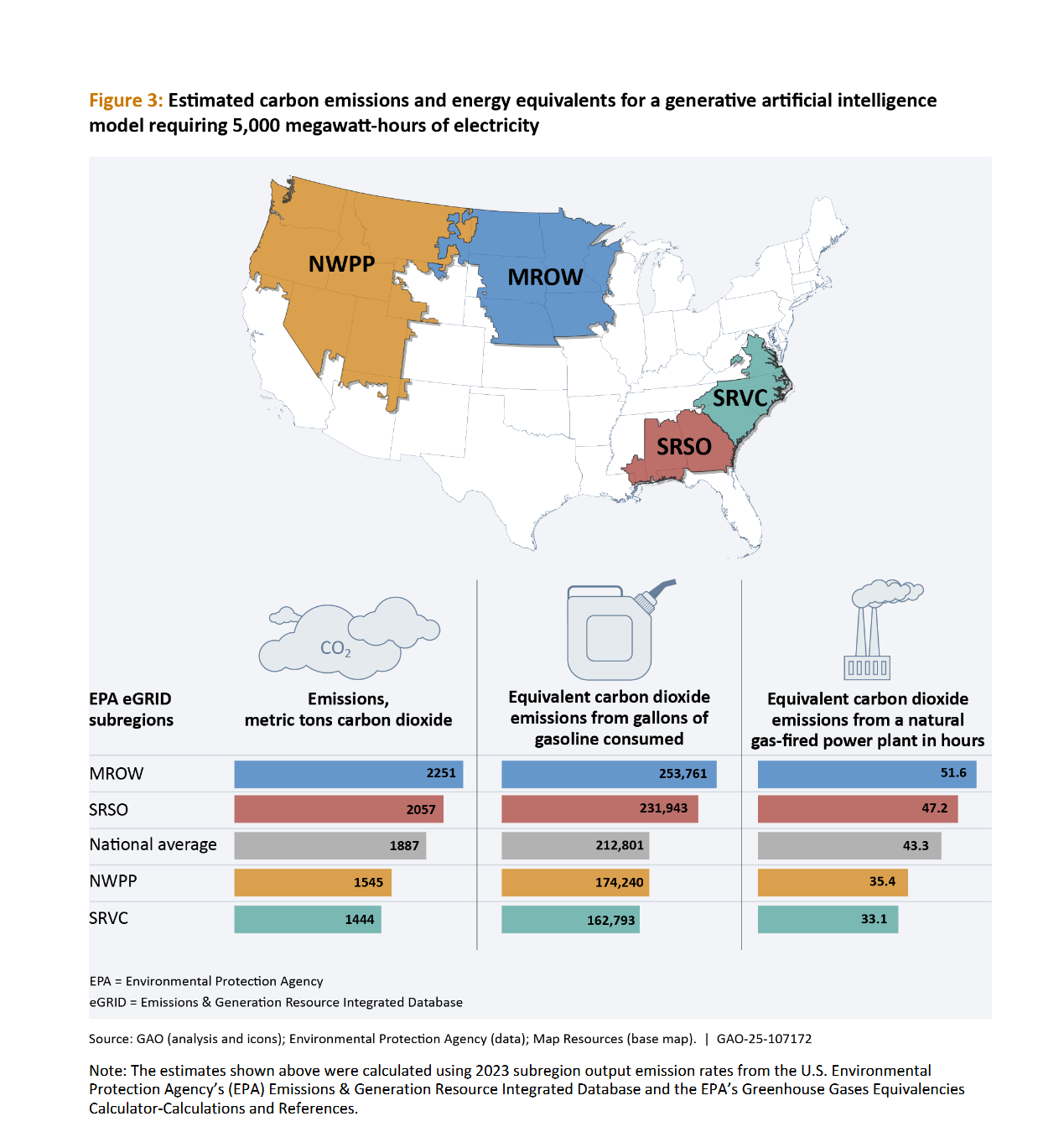

Geography mattered a lot. “If a model is trained in the northwestern U.S. where 44 percent of electricity generation comes from hydropower, the carbon emissions are lower than if the model is trained in the Midwest, where 38 percent of the electricity is generated from coal,” the report said.

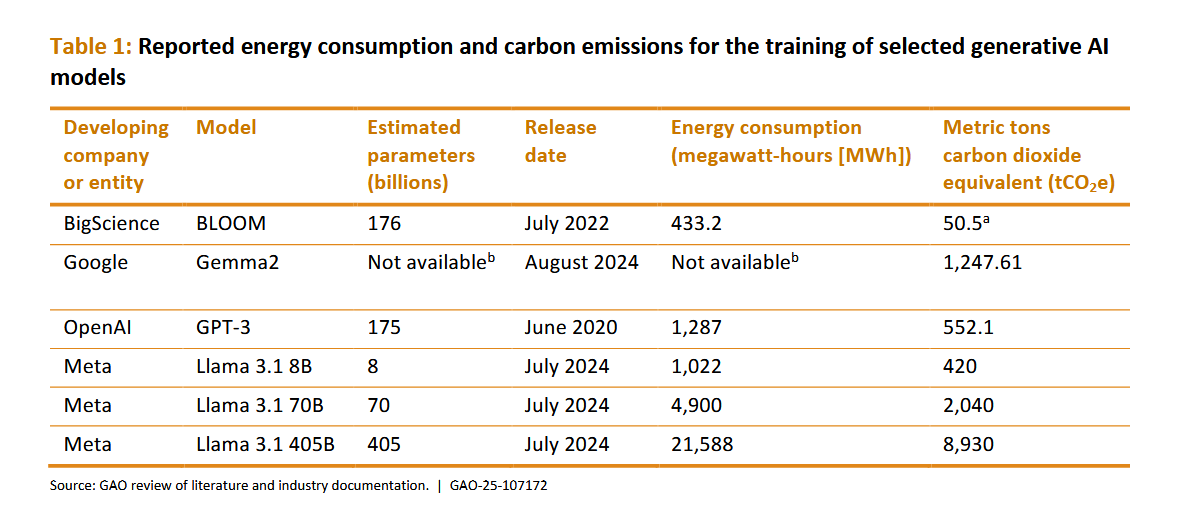

The report also estimated the carbon costs of training specific models. Google’s Gemma2 came in at 1,247.61 metric tons of carbon, and OpenAI’s GPT3 at 552 metric tons. Training Meta’s Llama 3.1 405B model, meanwhile, released 8,930 metric tons of carbon into the atmosphere, according to the report.

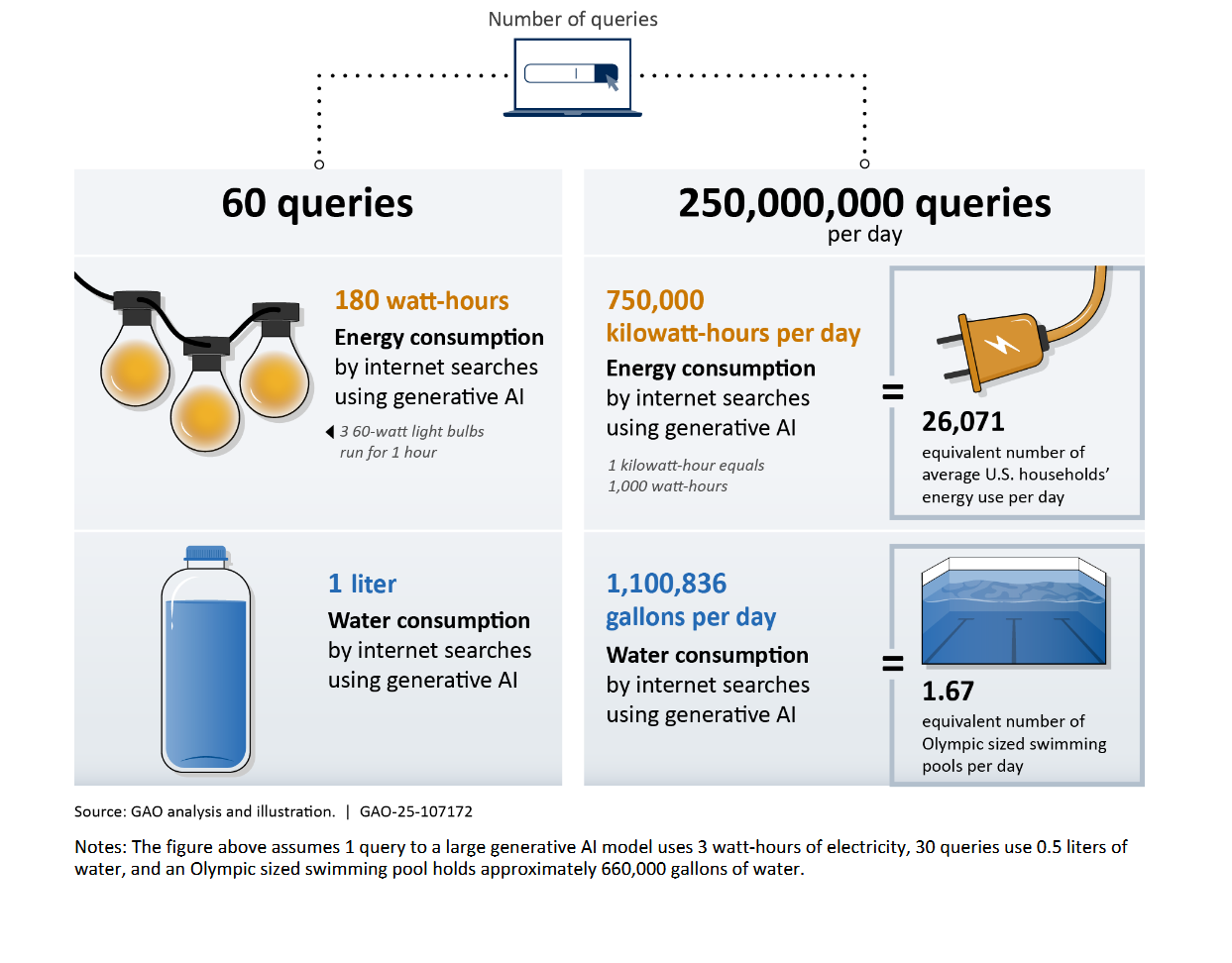

The GAO report also noted that using generative AI as a search engine is far more costly than traditional search. “One estimate indicates using generative AI could cost 10 times more than a standard keyword search,” it said. According to the GAO’s estimates, AI systems handling 250 million queries a day would use as much electricity in a day as 26,071 U.S. households, along with 1,100,836 gallons of water.

OpenAI CEO Sam Altman joked on X earlier this month that the company was spending “tens of millions of dollars” on electricity to generate responses to people saying “please” and “thank you” to ChatGPT. Every time that happens, a little more water is used up and a little more carbon is released.

When it came time to assess human harms, the GAO report also cited a lack of data. “Assessing the safety of a generative AI system is inherently challenging,” it said. “These systems largely remain ‘black boxes,’ meaning even the designers do not fully understand how the systems generate outputs. Without a deeper understanding, developers and users have a limited ability to anticipate safety concerns and can only mitigate problems as they arise.”

We have, of course, seen the harms firsthand. People have used generative AI to make non-consensual porn of classmates, coworkers, celebrities, and strangers. People have killed themselves after long conversations with AI companions. AI slop is filling up the internet like an invasive species of plant strangling an estuary.

The GAO report touched on some of these harms, but tied them back to an underlying problem: a lack of transparency from the companies making AI. “If harms were to occur because of the above risks or other issues, they would likely be compounded by the challenge of identifying the accountable party,” the GAO said. “This challenge is rooted in some of the core attributes of generative AI systems, which largely remain ‘black boxes.’”