OpenAI’s interim CEO Emmett Shear is name-checked as a character in a seminal Harry Potter fanfiction about “rational thinking” written by the AI researcher and influential blogger Eliezer Yudkowsky. The book is hugely popular among effective altruists and the “AI doomer” community, who are very worried that superintelligent AI will bring on an apocalypse for humanity.

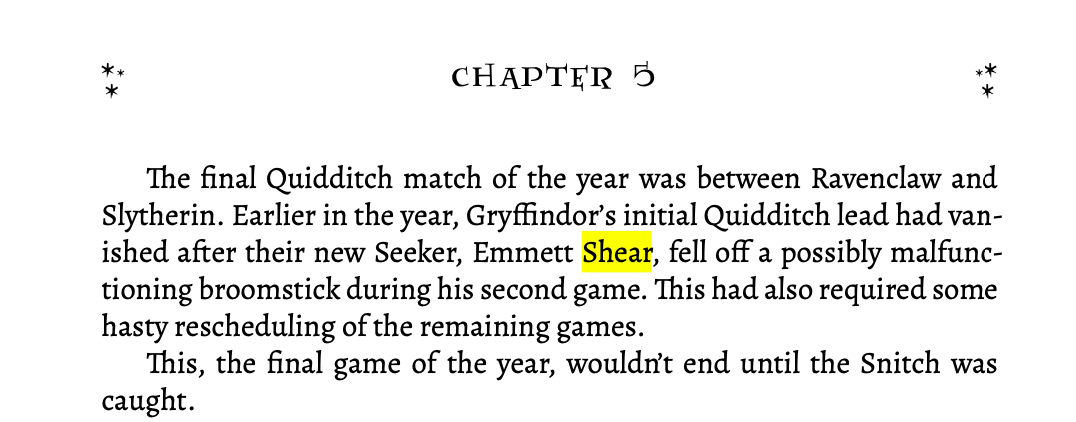

Shear is name-checked near the end of the 660,000-word Harry Potter and the Methods of Rationality (HPMOR), which was written explicitly as a recruiting tool by Yudkowsky between 2010 and 2015. “The final Quidditch match of the year was between Ravenclaw and Slytherin. Earlier in the year, Gryffindor’s initial Quidditch lead had vanished after their new Seeker, Emmett Shear, fell off a possibly malfunctioning broomstick during his second game. This had also required some hasty rescheduling of the remaining games,” the passage reads.

Given the book’s length, I have not read it and cannot even attempt to summarize it, but it follows Harry Potter in an alternate reality where the uncle who raised him is an Oxford professor who homeschools him in science and rational thinking (and is not abusive).

The book is not explicitly about AI alignment or AI safety, though artificial intelligence does exist in the world of HPMOR, and the “rational thinking” it espouses is part of the ideology underlying effective altruism. Effective altruism is the ideology that permeates OpenAI’s nonprofit board and, broadly speaking, EAs are obsessed with the possibility of human extinction via AI superintelligence.

(It is worth noting that many of the people predicting an AI-powered doomsday are also the people funding, developing, and raising money for new AI capabilities. The argument between AI accelerationists, AI doomers, AI safety ethicists, and everyone else is one that society is endlessly having and that we will continue to explore.)

Yudkowsky is one of the most prominent voices warning about the possibility of AI-enabled destruction. OpenAI’s board is made up primarily of people who are terrified of the possibility of an AI apocalypse, including chief scientist Ilya Sutskever; Helen Toner, an avowed effective altruist and director of strategy at Georgetown’s Center for Security and Emerging Technology; Tasha McCauley, who sits on the UK board of trustees for Effective Ventures (which funds organizations through the lens of effective altruism); and Quora CEO Adam D’Angelo.

In a recent podcast, Shear stated, “I know that Eliezer thinks that like, we’re all doomed for sure. I buy his doom argument. I buy the chain and the logic. My p(doom), my probability of doom, is like, my bid-ask spread and that’s pretty high because I have a lot of uncertainty, but I would say it’s like, between 5 and 50 [percent]. So there’s like, a wide spread.”

Through this lens, the selection of an interim CEO who is very familiar with and agrees with Yudkowsky’s arguments about AI makes sense.

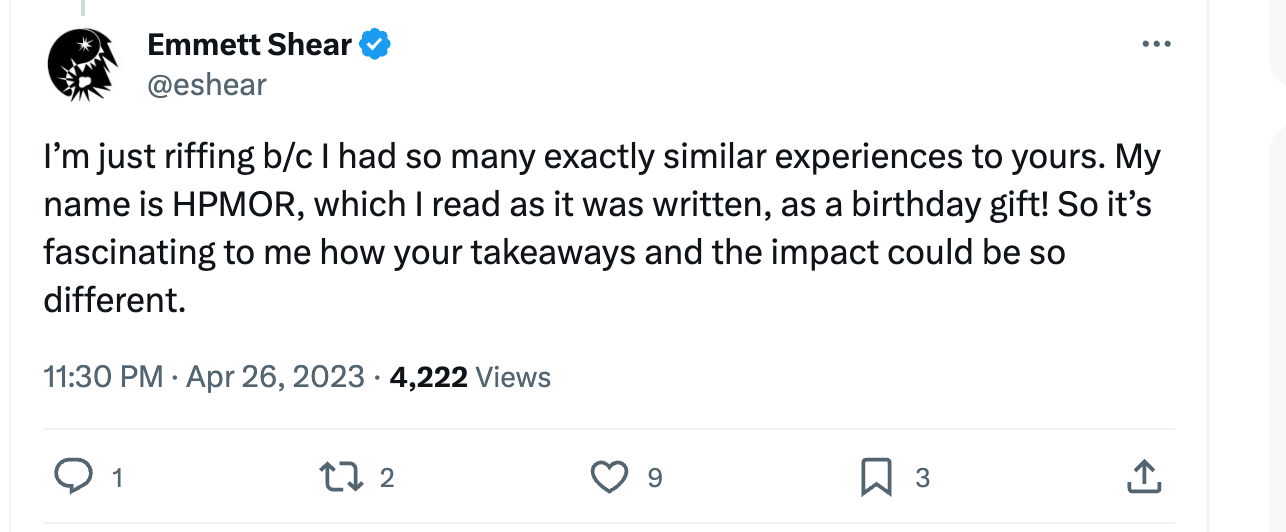

Shear has tweeted about reading Harry Potter and the Methods of Rationality (HPMOR) on multiple occasions, and said he reread it in 2022. He tweeted that his name appears in the book as a “birthday gift,” and that he read the various installments as they were released by Yudkowsky. Yudkowsky let people make “cameos” in the book if they or a friend made fan art about the book.

HPMOR is an extremely notable work in the effective altruism community and among people who believe that artificial intelligence could destroy humanity. It was written by Yudkowsky, the founder of LessWrong and one of the most vocal thinkers who believe AI could bring about human extinction, specifically to expose people to “rational thinking.”

In 2019, the Long-Term Future Fund, a grant fund for effective altruism projects, gave an EA member $28,000 to distribute HPMOR to winners of the “Math Olympiad” to teach them about rational thinking and introduce them to effective altruism concepts: “Empirically, a substantial number of top people in our community have (a) entered due to reading and feeling a deep connection to HPMOR and (b) attributed their approach to working on the long term future in substantial part to the insights they learned from reading HPMOR,” the grant writeup notes. “The book also contains characters who viscerally care about humanity, other conscious beings, and our collective long-term future, and take significant actions in their own lives to ensure that this future goes well.”

A survey of members of the effective altruism forum found that, of the people who learned about EA through books, five percent were initially exposed through HPMOR. There are hundreds of posts on the forum from people describing how they found effective altruism through HPMOR, and there have been discussions about how best to distribute copies of HPMOR so that they can have “the most impact” among people who read it.

The book is listed on the “Rationality reading list” by the Center for Applied Rationality, which was founded by Yudkowsky and has an explicit focus on “AI safety.”