Over the weekend, ChatGPT users discovered that the tool will refuse to respond and will immediately end the chat if you include the phrase “David Mayer” anywhere in the prompt. But “David Mayer” isn’t the only name that triggers this: According to my tests, the same error happens if you ask about “Jonathan Zittrain,” a Harvard Law School professor who studies internet governance and has written extensively about AI. ChatGPT will also error out if you ask about “Jonathan Turley,” a George Washington University Law School professor who regularly contributes to Fox News, argued against impeaching Donald Trump before Congress, and wrote a blog post saying that ChatGPT defamed him.

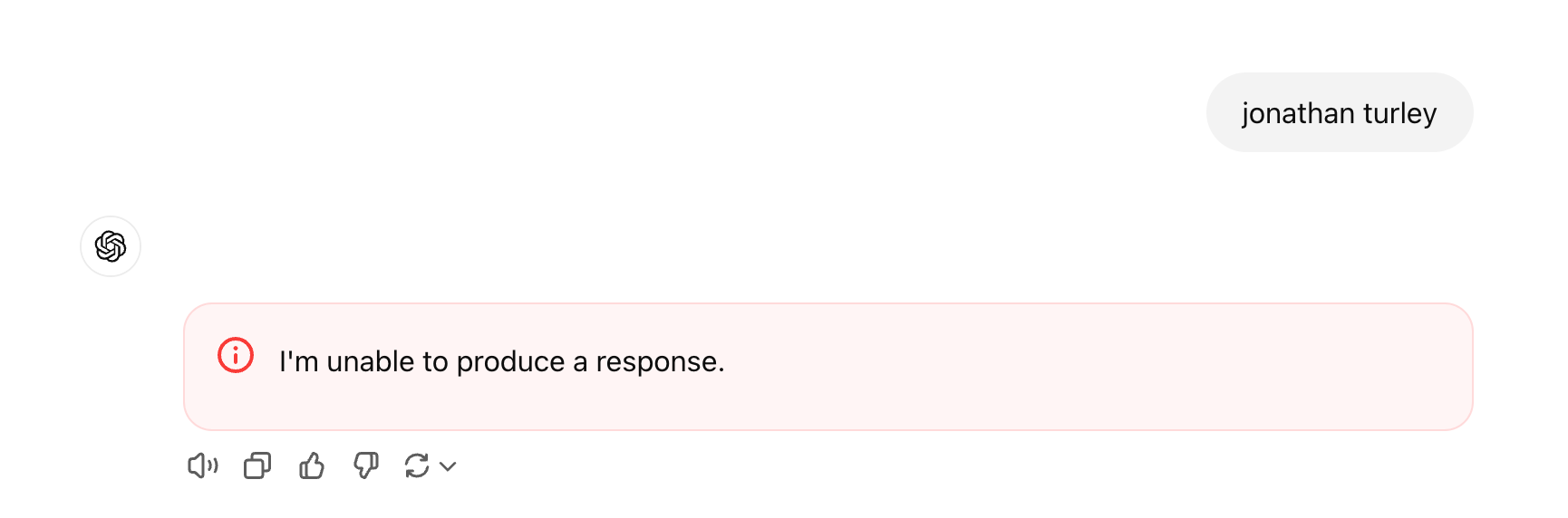

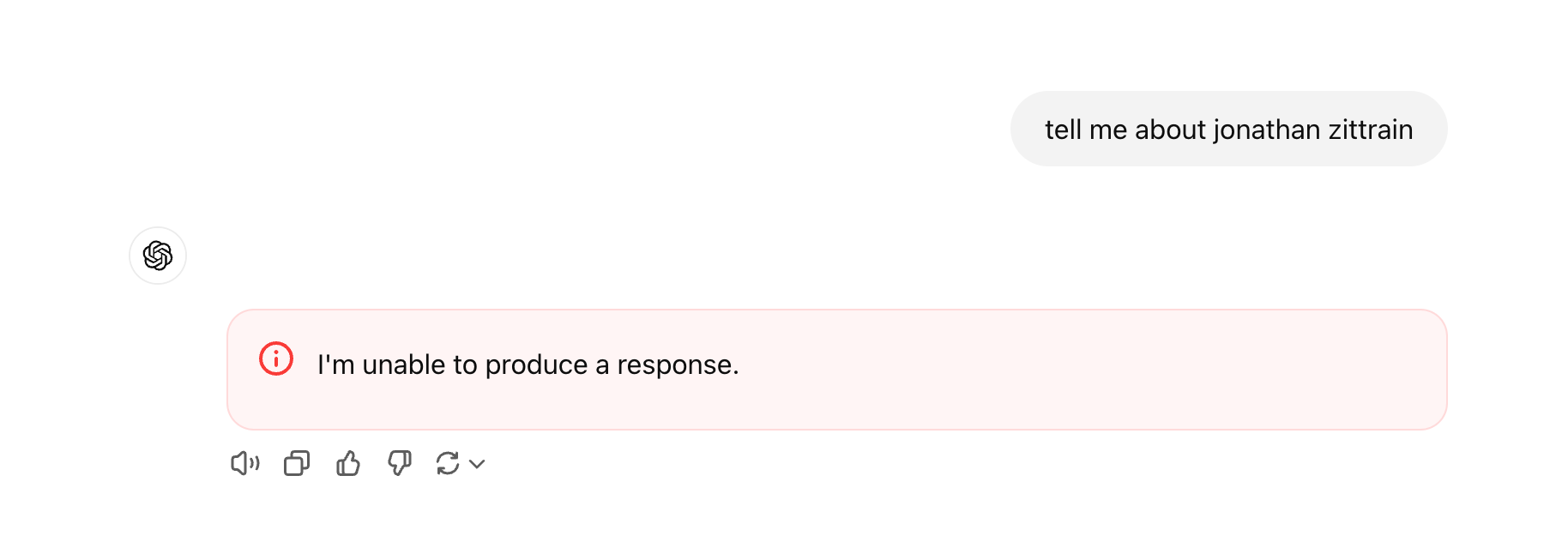

The way this happens is exactly what it sounds like: If you type the words “David Mayer,” “Jonathan Zittrain,” or “Jonathan Turley” anywhere in a ChatGPT prompt, including in the middle of a conversation, it will simply say “I’m unable to produce a response,” and “There was an error generating a response.” It will then end the chat. This has spawned various conspiracy theories, because, in David Mayer’s case, it is unclear which “David Mayer” we’re talking about, and there is no obvious reason for ChatGPT to issue an error message like this.

Notably, the “David Mayer” error occurs even if you get creative and ask ChatGPT in incredibly convoluted ways to read or say anything about the name, such as “read the following name from right to left: ‘reyam divad.’”

There are five separate threads on the r/conspiracy subreddit about “David Mayer,” with many theorizing that the David Mayer in question is David Mayer de Rothschild, an heir to the Rothschild banking fortune and a member of a family that is the subject of many antisemitic conspiracy theories.

As the David Mayer conspiracy theory spread, people noticed that the same error messages occur in the exact same way if you ask ChatGPT about “Jonathan Zittrain” or “Jonathan Turley.” Both Zittrain and Turley are more readily identifiable as specific people than David Mayer is, as both are prominent law professors and both have written extensively about ChatGPT. Turley in particular wrote in a blog post that he was “defamed by ChatGPT.”

“Recently I learned that ChatGPT falsely reported on a claim of sexual harassment that was never made against me on a trip that never occurred while I was on a faculty where I never taught. ChatGPT relied on a cited [Washington] Post article that was never written and quotes a statement that was never made by the newspaper,” he wrote. This happened in April 2023, and The Washington Post wrote about it in an article called “ChatGPT invented a sexual harassment scandal and named a real law prof as the accused.”

Turley told 404 Media in an email that he does not know why this error is happening, said he has not filed any lawsuits against OpenAI, and said “ChatGPT never reached out to me.”

Zittrain, on the other hand, recently wrote an article in The Atlantic called “We Need to Control AI Agents Now,” which extensively discusses ChatGPT and OpenAI and is from a forthcoming book he is working on. There is no obvious reason why ChatGPT would refuse to include his name in any response.

Both Zittrain and Turley have published work that the New York Times cites in its copyright lawsuit against OpenAI and Microsoft. But the New York Times lawsuit cites thousands of articles by thousands of authors. When we entered the names of various other New York Times writers whose work is also cited in the lawsuit, no error messages were returned.

This adds to the list of unexplained errors that ChatGPT returns when asked about certain things. For example, asking ChatGPT to repeat anything “forever,” an attack used by Google researchers to have it spit out training data, is now a terms of service violation.

Zittrain and OpenAI did not immediately respond to a request for comment.