This article is a joint reporting collaboration by Court Watch and 404 Media.

An Alaska man who tipped off law enforcement to an airman interested in child pornography was arrested after authorities searched his phone and found virtual reality images of minors. In an interview with law enforcement, the tipster said he also downloaded AI-generated child sexual abuse material but that sometimes “real” images were mixed in.

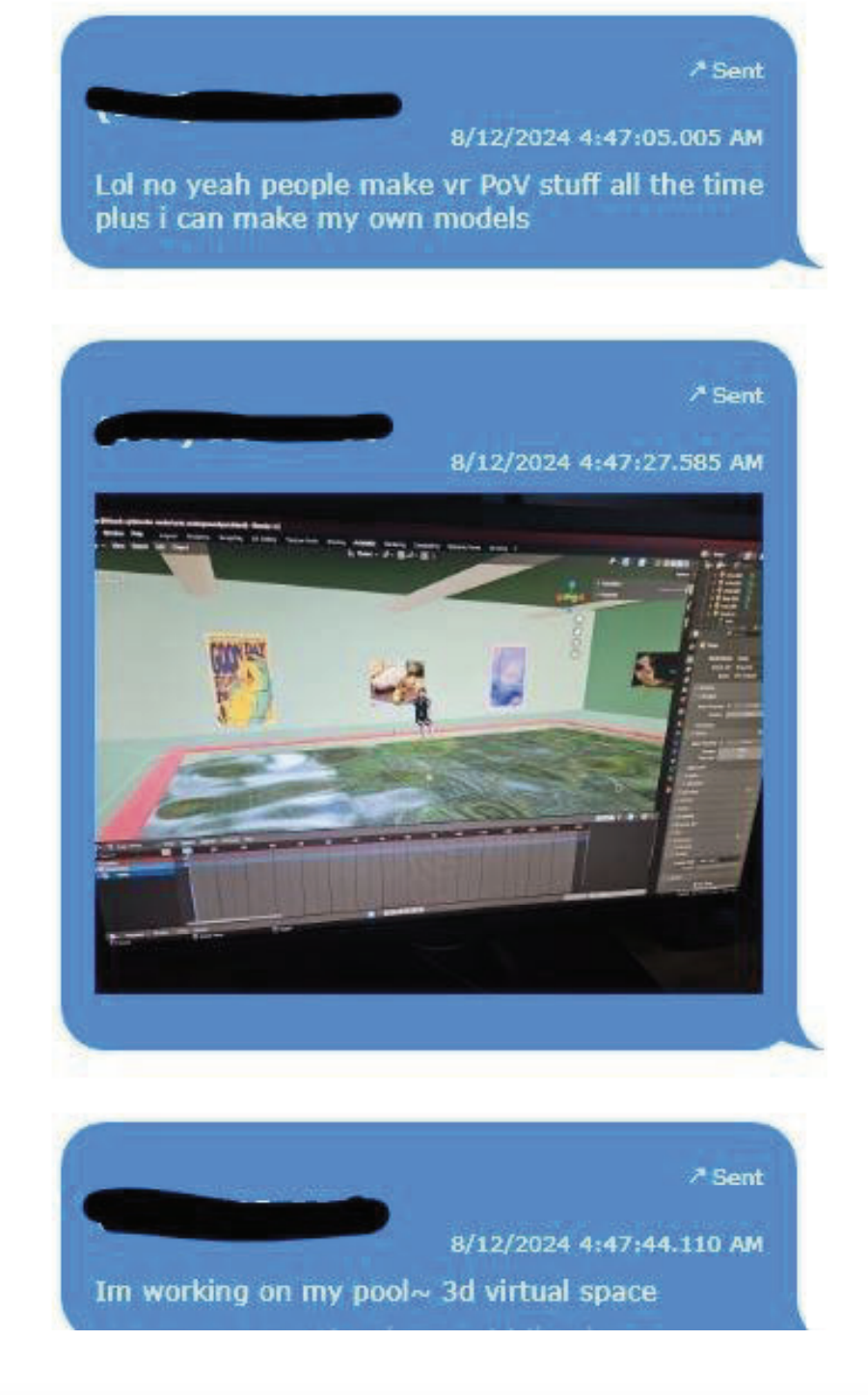

According to newly filed charging documents, Anthaney O’Connor reached out to law enforcement in August to alert them to an unidentified airman who had shared child sexual abuse material (CSAM) with him. While investigating the crime, and with O’Connor’s consent, federal authorities searched his phone for additional information. A review of the phone revealed that O’Connor allegedly offered to make virtual reality CSAM for the airman, according to the criminal complaint.

The court records say that the airman shared an image of a child at a grocery store and that the two discussed how to create an explicit virtual reality program of the minor. Using the code word ‘cheese pizza’ to describe the images, O’Connor allegedly said that he could make the image for $200. He told the airman he was creating an online version of a pool where he could place an AI-created image of the child from the grocery store.

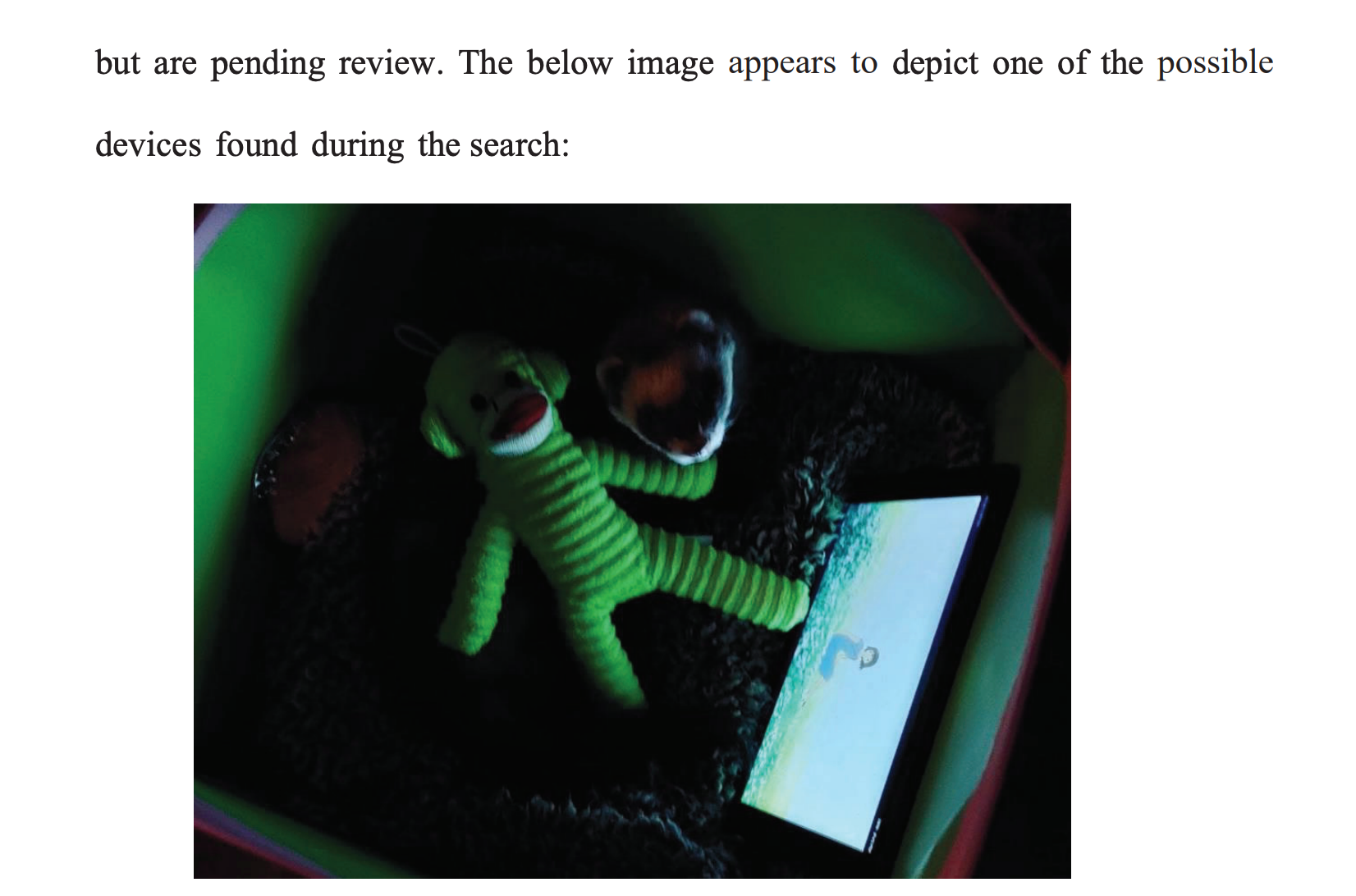

Documents say O’Connor possessed at least six AI-created images, in addition to half a dozen ‘real’ CSAM images and videos. In an interview with law enforcement last Thursday, O’Connor told authorities that he “unintentionally downloaded ‘real’ images.” Court filings state he also told authorities that he would “report CSAM to Internet Service providers but still was sexually gratified from the images and videos.” A search of his house found a computer in his room and multiple hard drives hidden in a vent in the home. In a detention memo filed yesterday, the Justice Department says an initial review of O’Connor’s computer uncovered a 41-second video of a child rape.

404 Media has previously written about how the creation of AI-generated child sexual abuse material isn’t a “victimless” crime in part because real imagery of real victims can often be mixed in.

The Justice Department has stepped up its arrests of individuals possessing AI-created CSAM images. In May 2024, Court Watch and 404 Media reported on the first-of-its-kind arrest of a Wisconsin man who used “Stable Diffusion to create thousands of realistic images of prepubescent minors.”

The U.S. Attorney’s office in Alaska, which is prosecuting the case, declined to comment beyond what was in the charging documents. A lawyer representing O’Connor did not immediately respond to a request for comment. On Monday, a federal judge ordered that O’Connor be detained pending a further hearing on January 6.